How to Build a YouTube MCP Server for Cursor and Windsurf AI Coding Assistants

While migrating my Learn English Sounds website from a CMS to Next.js, I hit a frustrating roadblock. I needed to find YouTube videos demonstrating each English phoneme, but my AI coding assistants (Cursor and Windsurf) couldn't search YouTube directly.

This meant I had to manually verify each video suggestion, which interrupted my workflow. I'd get a recommendation, check if it existed, then return to coding. Not a huge problem, but definitely inefficient.

To streamline this process, I built a simple Model Context Protocol (MCP) server for YouTube. It lets my AI coding tools search YouTube and retrieve real video information without my intervention.

This guide explains how to build your own MCP server. It's a straightforward project that can save you time if you frequently need your AI coding assistant to access external services like YouTube.

What is an MCP?

Imagine you're using an AI assistant like Claude or GPT, but it can't access the internet or other services directly. The Model Context Protocol (MCP) is a standard way for the AI to talk to those services, and an MCP server acts as the translator in the middle.

Think of it this way: if you speak English and your friend speaks Spanish, you need a translator to communicate. Similarly, an MCP server translates between an AI tool (like Cursor or Windsurf) and an external service (like YouTube). It follows a shared set of rules so both sides understand each other.

In technical terms, an MCP server is a program that exposes tools and data through a standardized protocol built on JSON-RPC 2.0, so AI tools can request and receive data without human intervention. The protocol defines how requests are formatted, how responses are structured, and how errors are reported.
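Under the hood, MCP messages are JSON-RPC 2.0 objects: a client such as Cursor discovers a server's tools with `tools/list` and invokes one with `tools/call`. The sketch below builds both sides of a single call as plain Python dicts. The tool name `search_videos` comes from this post; the helper names and argument values are hypothetical, and real clients may include extra fields.

```python
import json
from typing import Any


def make_tool_call(request_id: int, tool_name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Build the JSON-RPC 2.0 request a client sends to run one MCP tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def make_tool_result(request_id: int, payload: dict[str, Any], is_error: bool = False) -> dict[str, Any]:
    """Wrap a tool's output as text content in a JSON-RPC 2.0 response."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,  # echoes the request id so the client can match them up
        "result": {
            "content": [{"type": "text", "text": json.dumps(payload)}],
            "isError": is_error,  # tool-level failures are flagged here, not as protocol errors
        },
    }


# Hypothetical exchange for one search.
request = make_tool_call(1, "search_videos", {"query": "th sound pronunciation"})
response = make_tool_result(1, {"videos": []})
print(json.dumps(request, indent=2))
print(json.dumps(response, indent=2))
```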

Anthropic created MCP and released it as an open standard that lets developers build secure connections between data sources and AI tools. The release included SDKs, local MCP server support in the Claude Desktop apps, and pre-built servers for popular systems like Google Drive, GitHub, and Slack.

Why I needed this

Learn English Sounds requires videos showing proper pronunciation for each phoneme. Without direct YouTube access, Cursor or Windsurf would suggest videos that often didn't exist, requiring me to verify each suggestion manually.

Example workflow before the MCP:

Me: "I need videos for the 'th' sound."

Cursor or Windsurf: "I recommend 'English TH Sounds - How to pronounce TH correctly'."

Me: "Does that actually exist? What's the channel?"

Cursor or Windsurf: "It should be on the 'English Pronunciation' channel."

Me: *searches YouTube* "That channel exists but that video doesn't."

Building the YouTube server

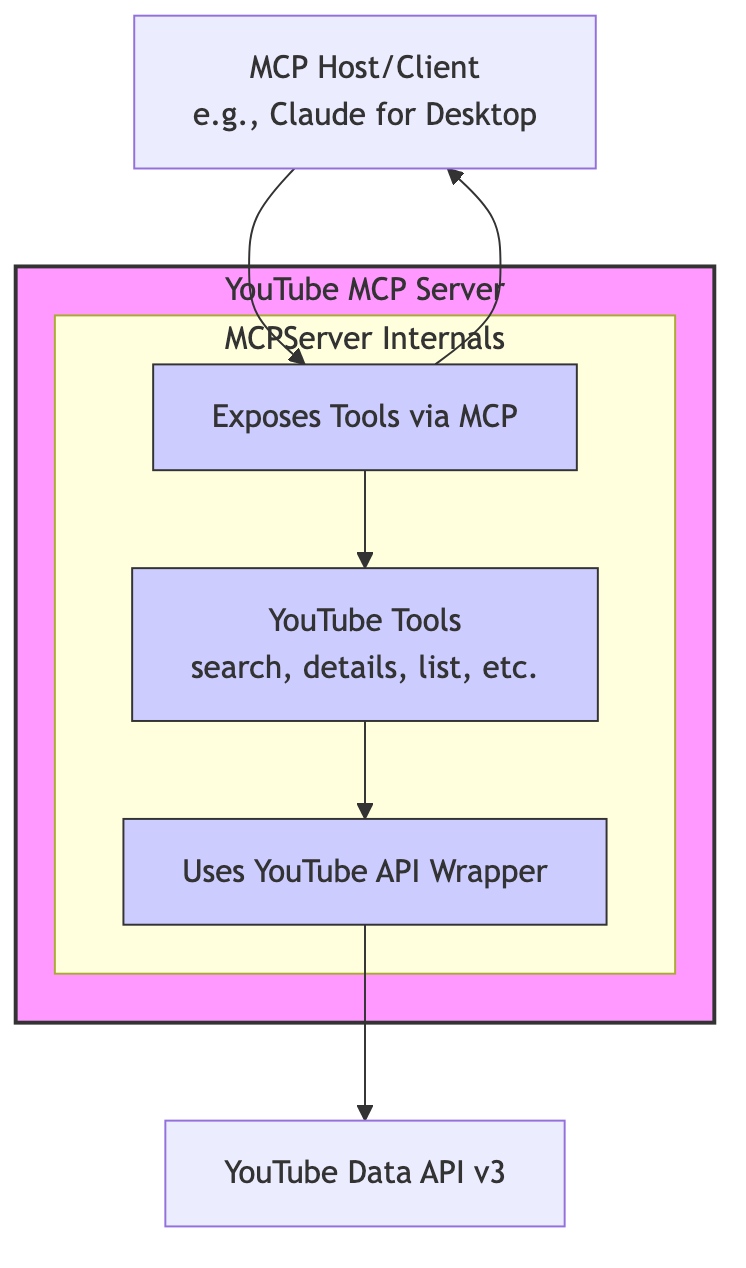

I built the server using Python with FastAPI, connecting to the YouTube Data API. Here's the architecture diagram showing how it works:

The main challenge was managing YouTube's API quotas and ensuring the server followed the MCP specification correctly.
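Quota is the constraint that shapes the design. By default a YouTube Data API project gets 10,000 units per day, and a `search.list` call costs 100 units while `videos.list` costs 1, so about 100 searches exhaust the budget. Here is a minimal sketch of one way to cope, assuming a per-process in-memory cache and a locally tracked budget; the class and method names are hypothetical, and this is not necessarily how the author's server handles it.

```python
import time
from typing import Any, Callable

# Per-call costs from the YouTube Data API quota table (verify current values).
QUOTA_COST = {"search.list": 100, "videos.list": 1}
DAILY_QUOTA = 10_000  # default daily allocation for a new project


class QuotaExceeded(Exception):
    """Raised instead of calling the API when the local budget would go negative."""


class QuotaAwareCache:
    """Cache API responses and track a local estimate of remaining quota."""

    def __init__(self, daily_quota: int = DAILY_QUOTA, ttl_seconds: float = 3600.0) -> None:
        self.remaining = daily_quota
        self.ttl = ttl_seconds
        self._cache: dict[tuple, tuple[float, Any]] = {}

    def call(self, method: str, params: dict[str, Any], fetch: Callable[[], Any]) -> Any:
        key = (method, tuple(sorted(params.items())))
        hit = self._cache.get(key)
        if hit is not None and time.monotonic() - hit[0] < self.ttl:
            return hit[1]  # cache hit: no API call, no quota spent
        cost = QUOTA_COST.get(method, 1)  # assumption: unlisted methods cost 1 unit
        if cost > self.remaining:
            raise QuotaExceeded(f"{method} needs {cost} units; {self.remaining} left today")
        self.remaining -= cost  # deduct first: even failed requests consume quota
        result = fetch()
        self._cache[key] = (time.monotonic(), result)
        return result


# Usage with a stand-in for the real API request.
cache = QuotaAwareCache(daily_quota=150)
cache.call("search.list", {"q": "th sound"}, lambda: {"items": []})
cache.call("search.list", {"q": "th sound"}, lambda: {"items": []})  # served from cache
print(cache.remaining)  # 50
```

Google resets the quota daily at midnight Pacific Time, so a long-running server would also need to reset `remaining` on that schedule.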

How it works

With the MCP server, Cursor or Windsurf can now search YouTube directly and return real videos, complete with view and like counts. This cuts out the back-and-forth and lets me focus on development.
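For Cursor or Windsurf to query the server at all, it has to be registered with the editor. Both read an `mcpServers` JSON object: Cursor from `.cursor/mcp.json` in a project (or `~/.cursor/mcp.json` globally) and Windsurf from `~/.codeium/windsurf/mcp_config.json`, at least at the time of writing. The sketch below generates such an entry, assuming the server is a local script launched over stdio; the server name `youtube` and the `server.py` path are hypothetical, and a server exposed over HTTP would be registered by URL instead.

```python
import json
import sys
from pathlib import Path
from typing import Any


def mcp_server_entry(script_path: str, api_key: str = "YOUR_YOUTUBE_API_KEY") -> dict[str, Any]:
    """Build an mcpServers config that launches a local Python MCP server over stdio."""
    return {
        "mcpServers": {
            "youtube": {  # hypothetical name; it is how the editor labels the server
                "command": sys.executable,  # the interpreter running this script
                "args": [script_path],
                "env": {"YOUTUBE_API_KEY": api_key},  # placeholder, not a real key
            }
        }
    }


if __name__ == "__main__":
    # Print the JSON; paste it into .cursor/mcp.json or mcp_config.json by hand.
    print(json.dumps(mcp_server_entry(str(Path("server.py").resolve())), indent=2))
```

Passing the key through `env` is an alternative to a `.env` file; either way, keep real keys out of version control.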

New workflow:

Me: "Find videos for the 'th' sound."

Cursor or Windsurf: *queries MCP server*

Cursor or Windsurf: "I found these options, with their view and like counts. Which would you prefer?"

Me: "The first one."

Cursor or Windsurf: *adds embed code with correct video ID*
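The final step in that exchange, turning a video ID into embed markup, is simple enough to sketch. The Next.js site itself would render this in JSX; the Python helper below (a hypothetical name) just shows the idea, and its 11-character ID check reflects how YouTube video IDs look in practice rather than a documented guarantee.

```python
import html
import re

# Assumption: YouTube video IDs are 11 characters drawn from letters, digits, "-" and "_".
VIDEO_ID_PATTERN = re.compile(r"[A-Za-z0-9_-]{11}")


def youtube_embed(video_id: str, title: str) -> str:
    """Return iframe markup for a YouTube video, rejecting malformed IDs."""
    if not VIDEO_ID_PATTERN.fullmatch(video_id):
        raise ValueError(f"Not a valid YouTube video ID: {video_id!r}")
    return (
        f'<iframe src="https://www.youtube.com/embed/{video_id}" '
        f'title="{html.escape(title)}" width="560" height="315" '
        "allowfullscreen></iframe>"
    )


print(youtube_embed("dQw4w9WgXcQ", "How to Pronounce TH"))
```

Validating the ID before building markup means a hallucinated or truncated ID fails loudly instead of producing a broken player.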

Technical implementation details

Key technical considerations when building an MCP:

1. Authentication: The MCP spec doesn't prescribe how a server authenticates with the service it wraps. I used a YouTube API key stored in an environment variable (YOUTUBE_API_KEY, kept in a .env file).

2. Response format: AI tools handle responses best when each endpoint returns a consistent, predictable structure. The format varies by endpoint; here's an example from the search_videos endpoint:

```json
{
  "videos": [
    {
      "title": "How to Pronounce TH - English Pronunciation Lesson",
      "videoId": "dQw4w9WgXcQ",
      "channelTitle": "English Pronunciation",
      "description": "Learn how to pronounce the TH sound in English correctly."
    }
  ]
}
```

And here's an example from the get_video_details endpoint, which returns more comprehensive information:

```json
{
  "title": "How to Pronounce TH - English Pronunciation Lesson",
  "description": "Learn how to pronounce the TH sound in English correctly.",
  "channelTitle": "English Pronunciation",
  "publishedAt": "2023-04-15T14:30:00Z",
  "duration": "PT5M30S",
  "viewCount": "1234567",
  "likeCount": "12345",
  "commentCount": "1234"
}
```

3. Error handling: Consistent error formats are essential, because AI tools can misinterpret unexpected responses. My implementation includes specific error messages for different scenarios:

- Missing API key: "Failed to initialize YouTube service. Check YOUTUBE_API_KEY environment variable."

- Invalid API key: "An HTTP error 400 occurred: [error content]. This might indicate an invalid or missing API key (YOUTUBE_API_KEY)."

- Resource not found: "Video with ID '[video_id]' not found."

- General HTTP errors: "An HTTP error [status] occurred: [error content]"
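Items 2 and 3 can be sketched together: flatten a `videos.list` response into the get_video_details shape, and produce the error strings listed above. This minimal sketch assumes the response was requested with `part="snippet,contentDetails,statistics"`; the function and exception names are hypothetical.

```python
from typing import Any


class VideoNotFound(Exception):
    """Raised when videos.list returns no item for the requested ID."""


def video_details(video_id: str, response: dict[str, Any]) -> dict[str, Any]:
    """Flatten a videos.list response into the get_video_details shape."""
    items = response.get("items", [])
    if not items:
        # An unknown ID yields an empty item list rather than an HTTP 404.
        raise VideoNotFound(f"Video with ID '{video_id}' not found.")
    item = items[0]
    snippet = item.get("snippet", {})
    stats = item.get("statistics", {})
    return {
        "title": snippet.get("title"),
        "description": snippet.get("description"),
        "channelTitle": snippet.get("channelTitle"),
        "publishedAt": snippet.get("publishedAt"),
        "duration": item.get("contentDetails", {}).get("duration"),  # ISO 8601, e.g. PT5M30S
        # Counts arrive as strings and may be missing (e.g. comments disabled).
        "viewCount": stats.get("viewCount"),
        "likeCount": stats.get("likeCount"),
        "commentCount": stats.get("commentCount"),
    }


def http_error_message(status: int, content: str) -> str:
    """Build the HTTP error strings used by the server, with a hint for 400s."""
    message = f"An HTTP error {status} occurred: {content}"
    if status == 400:
        message += ". This might indicate an invalid or missing API key (YOUTUBE_API_KEY)."
    return message
```

With google-api-python-client, the status and body are available on a raised `HttpError` as `err.resp.status` and `err.content` (bytes, so decode it before formatting).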

Why this matters for AI coding tools

When you're coding with AI assistants like Cursor or Windsurf, you're constantly making decisions based on external information. Without MCPs, these tools are essentially working blindfolded when it comes to real-time data.

Here's why MCP servers matter for AI coding:

- Reduced context-switching: Stay in your coding flow without jumping between applications

- Verified information: Get accurate, real-time data instead of potentially outdated or hallucinated content

- Specialized knowledge: Access domain-specific information that the AI wasn't trained on

- Customized workflows: Build MCPs for your specific needs and development patterns

According to the OpenAI documentation, MCPs provide "domain-specific knowledge with clear boundaries," making them ideal for extending AI capabilities in a controlled, predictable way. Similarly, Anthropic's Claude Desktop application uses MCPs to safely connect Claude to services like Google Drive and GitHub.

Creating your own MCP

If you want to build an MCP:

1. Start with a single API endpoint that would save you the most time

2. Focus on getting the response format correct

3. Implement proper error handling
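Following those steps, a first version might expose just `search_videos`. The post's server uses FastAPI; this sketch swaps in the `FastMCP` helper from the official MCP Python SDK (`pip install mcp google-api-python-client`) because it handles the protocol plumbing, so treat it as one possible starting point rather than the author's code. The YouTube call is kept in a plain function so it can be tested with a fake client.

```python
import os
from typing import Any


def search_videos(youtube: Any, query: str, max_results: int = 5) -> dict[str, Any]:
    """Call search.list and keep only the fields the assistant needs."""
    response = (
        youtube.search()
        .list(part="snippet", q=query, type="video", maxResults=max_results)
        .execute()
    )
    return {
        "videos": [
            {
                "title": item["snippet"]["title"],
                "videoId": item["id"]["videoId"],
                "channelTitle": item["snippet"]["channelTitle"],
                "description": item["snippet"]["description"],
            }
            for item in response.get("items", [])
        ]
    }


def main() -> None:
    # Imported lazily so search_videos can be tested without these packages.
    from googleapiclient.discovery import build
    from mcp.server.fastmcp import FastMCP

    youtube = build("youtube", "v3", developerKey=os.environ["YOUTUBE_API_KEY"])
    server = FastMCP("youtube")

    @server.tool(name="search_videos")
    def search_videos_tool(query: str, max_results: int = 5) -> dict[str, Any]:
        """Find YouTube videos matching a query."""
        return search_videos(youtube, query, max_results)

    server.run()  # serves over stdio by default
```

Calling `main()` from an `if __name__ == "__main__":` guard in the server script starts it on stdio, which is what an editor launches when it spawns the process.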

My YouTube MCP includes these endpoints:

- /search_videos: Find videos matching a query

- /get_video_details: Get detailed info about a specific video

- /get_related_videos: Find videos related to a specific video

- /list_channel_videos: Get recent uploads from a channel

- /get_channel_details: Get info about a YouTube channel

- /search_playlists: Find playlists matching a query

- /get_playlist_items: Get videos in a specific playlist
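Because `search.list` is by far the most expensive call, it helps to know which tools depend on it. Below is a hypothetical mapping of the seven tools to YouTube Data API v3 methods, with per-call quota units as documented at the time of writing. The post doesn't say how each tool is implemented, so the method choices are assumptions; in particular, `search.list`'s `relatedToVideoId` parameter was deprecated in 2023, so the get_related_videos entry assumes a plain keyword search.

```python
# Hypothetical tool -> (API calls, quota units per tool call) mapping.
TOOL_QUOTA = {
    "search_videos": ("search.list", 100),
    "get_video_details": ("videos.list", 1),
    "get_related_videos": ("search.list", 100),  # assumes a keyword search
    "list_channel_videos": ("channels.list + playlistItems.list", 2),  # via the uploads playlist
    "get_channel_details": ("channels.list", 1),
    "search_playlists": ("search.list", 100),
    "get_playlist_items": ("playlistItems.list", 1),
}


def estimate_daily_units(calls_per_day: dict[str, int]) -> int:
    """Estimate quota units used per day for a given mix of tool calls."""
    return sum(TOOL_QUOTA[tool][1] * count for tool, count in calls_per_day.items())


# Example: 40 searches plus 200 detail lookups against a 10,000-unit daily quota.
print(estimate_daily_units({"search_videos": 40, "get_video_details": 200}))  # 4200
```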

Results

Building this MCP server removed the manual step of checking every video suggestion, which noticeably sped up development. Cursor and Windsurf now make better suggestions based on actual video data, and I can maintain focus on development without context-switching to search YouTube.

Popular MCP Servers and Current Uses

Many other MCP servers are already in active use:

- GitHub MCP: Allows AI tools to search repositories, view code, and access issues/PRs

- Google Drive MCP: Enables document search and retrieval from Google Drive

- Slack MCP: Provides access to channels, messages, and workspace information

- Firebase MCP: Enables querying and updating Firestore collections and documents

- MongoDB MCP: Provides access to MongoDB databases for AI-assisted data analysis

- PostgreSQL MCP: Allows AI tools to query relational databases and visualize results

- Jira MCP: Allows querying and updating tickets and project information

- Mermaid MCP: Helps generate diagrams from text descriptions (similar to my Mermaid automation post)

- Wolfram Alpha MCP: Provides computational and factual knowledge

These servers are particularly popular with Claude Desktop users and developers working with Cursor or Windsurf. As the MCP ecosystem grows, we're seeing more specialized servers for domains like data analysis, API testing, and documentation generation.

Try it yourself

The code for my YouTube MCP server is available on GitHub. You'll need a YouTube API key to set it up.
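Setting up mostly means getting that key to the server. Here is a small sketch of how a key could be loaded, consistent with the .env approach and error message described earlier: it reads `YOUTUBE_API_KEY` from the environment and, if the optional `python-dotenv` package is installed, from a `.env` file first. The function name is hypothetical.

```python
import os


def load_api_key(env_file: str = ".env") -> str:
    """Return YOUTUBE_API_KEY, loading it from a .env file when python-dotenv is available."""
    try:
        from dotenv import load_dotenv  # optional dependency: pip install python-dotenv

        load_dotenv(env_file)  # does not override variables that are already set
    except ImportError:
        pass  # fall back to the process environment only
    key = os.environ.get("YOUTUBE_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "Failed to initialize YouTube service. Check YOUTUBE_API_KEY environment variable."
        )
    return key
```

The key can then be passed to google-api-python-client as `build("youtube", "v3", developerKey=load_api_key())`.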