How to move from local deploys to fast CI/CD with GitHub Actions

I've been building a web app for pronunciation coaching to help me improve my English prosody. I'm a native Spanish speaker and figured I'd open it up in case it helps others learning English as a second language. The backend is Python with FastAPI, using Parselmouth (a Python wrapper for Praat) for audio analysis and Google Gemini for generating coaching feedback. The frontend is React with Vite and TypeScript.

For infrastructure I went with Google Cloud. The backend runs on Cloud Run, the frontend is hosted on Firebase Hosting, and user data lives in Firestore. Docker images get pushed to Artifact Registry. The code lives on GitHub. It's a fairly standard setup for a side project that I wanted to keep within free tier limits.

The problem

I was deploying both frontend and backend from my local machine. The frontend was quick enough, but the backend was painful. Every deploy meant building the Docker image from scratch, which took 5-10 minutes while pip downloaded all the Python dependencies.

I wanted to move to CI/CD so deployments would happen automatically when I pushed to main. But I also didn't want to wait 10 minutes for every push. So I set out to make GitHub Actions smart about what actually changed.

The monorepo structure

Everything lives in one repo with a frontend/ folder and a backend/ folder. The workflow triggers on push to main and I wanted it to figure out what actually changed and only deploy that part. Frontend files changed? Just deploy to Firebase. Backend code changed? Just deploy to Cloud Run. And if requirements.txt changed, rebuild the Docker base image. But only then.
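The trigger and shared settings described here might look like the sketch below. The workflow name is a placeholder, and the ARTIFACT_REGISTRY value is an assumption pieced together from the image path that appears later in this post, so adjust both for your own project.

```yaml
# Hypothetical workflow header; the name and the env value are assumptions.
name: Deploy

on:
  push:
    branches: [main]

env:
  # Assumed registry path: <region>-docker.pkg.dev/<project>/<repository>
  ARTIFACT_REGISTRY: us-central1-docker.pkg.dev/lescoach/prosody-repo
```

Defining the registry path once at the workflow level lets every job refer to it as env.ARTIFACT_REGISTRY instead of repeating the full path.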

Finding a way to detect changes

I searched around and found an action called dorny/paths-filter. It checks which files changed in a push and outputs booleans that other jobs can use. I set it up as the first job in my workflow:

    jobs:
      detect-changes:
        runs-on: ubuntu-latest
        outputs:
          backend: ${{ steps.filter.outputs.backend }}
          frontend: ${{ steps.filter.outputs.frontend }}
          requirements: ${{ steps.filter.outputs.requirements }}
        steps:
          - uses: actions/checkout@v4
          - uses: dorny/paths-filter@v3
            id: filter
            with:
              filters: |
                backend:
                  - 'backend/**'
                frontend:
                  - 'frontend/**'
                requirements:
                  - 'backend/requirements.txt'
                  - 'backend/Dockerfile.base'

This gave me three outputs I could reference in other jobs to decide whether they should run.

Dealing with slow Docker builds

Even with change detection working, backend deploys were still slow. Every build ran pip install from scratch. My requirements.txt has ML libraries, audio processing stuff, the works. That's a lot of downloading.

I realized I change dependencies maybe once a month. The rest of the time I'm just changing application code. So I tried splitting the Dockerfile in two.

Dockerfile.base

I put all the slow stuff here: system packages, pip dependencies. The idea was to build this once and push it to Artifact Registry, then reuse it.

    FROM python:3.11-slim

    RUN apt-get update && apt-get install -y --no-install-recommends \
        ffmpeg \
        espeak-ng \
        libsndfile1 \
        && rm -rf /var/lib/apt/lists/*

    WORKDIR /app

    COPY requirements.txt .

    RUN pip install --upgrade pip && \
        pip install --no-cache-dir -r requirements.txt

Dockerfile.fast

This one starts from the base image and just copies the code. No apt-get. No pip. Just a COPY command.

    FROM us-central1-docker.pkg.dev/lescoach/prosody-repo/prosody-api-base:latest

    WORKDIR /app

    COPY app/ ./app/

    ENV PYTHONUNBUFFERED=1
    ENV PORT=8080

    EXPOSE 8080

    CMD ["uvicorn", "app.main:app", "--host", "0.0.0.0", "--port", "8080"]

This brought build time down from 5-10 minutes to about 30 seconds, which was a nice improvement.

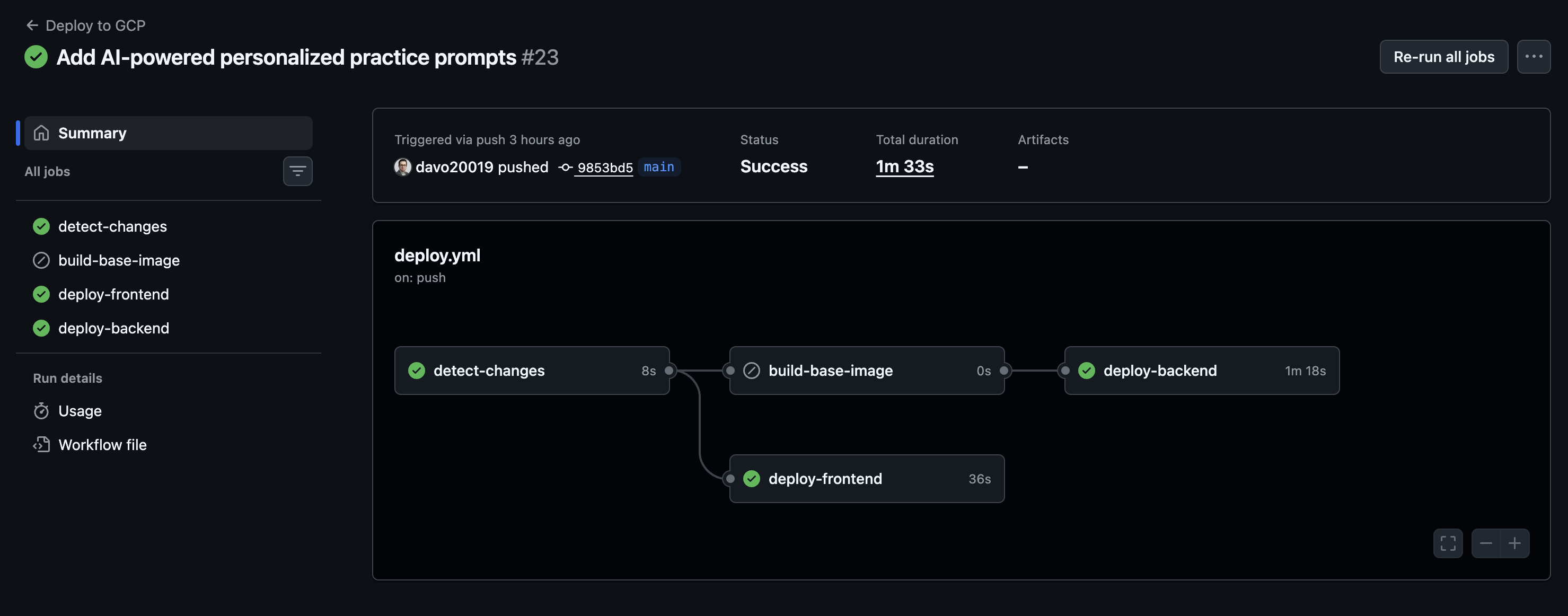

Putting the workflow together

I set up the base image job to only run when dependencies actually change:

    build-base-image:
      runs-on: ubuntu-latest
      needs: detect-changes
      if: needs.detect-changes.outputs.requirements == 'true'
      steps:
        - uses: actions/checkout@v4
        - uses: google-github-actions/auth@v2
          with:
            workload_identity_provider: ${{ secrets.WIF_PROVIDER }}
            service_account: ${{ secrets.WIF_SERVICE_ACCOUNT }}
        - uses: google-github-actions/setup-gcloud@v2
        - run: gcloud auth configure-docker us-central1-docker.pkg.dev --quiet
        - name: Build and push base image
          working-directory: backend
          run: |
            docker build -f Dockerfile.base -t ${{ env.ARTIFACT_REGISTRY }}/prosody-api-base:latest .
            docker push ${{ env.ARTIFACT_REGISTRY }}/prosody-api-base:latest

The backend deploy job took some trial and error. It needed to wait for the base image build if it ran, but also work when it was skipped. I ended up with this:

    deploy-backend:
      runs-on: ubuntu-latest
      needs: [detect-changes, build-base-image]
      if: |
        always() &&
        needs.detect-changes.outputs.backend == 'true' &&
        (needs.build-base-image.result == 'success' || needs.build-base-image.result == 'skipped')
      steps:
        # auth steps...
        - name: Check if base image exists
          id: check-base
          run: |
            if docker manifest inspect ${{ env.ARTIFACT_REGISTRY }}/prosody-api-base:latest > /dev/null 2>&1; then
              echo "exists=true" >> "$GITHUB_OUTPUT"
            else
              echo "exists=false" >> "$GITHUB_OUTPUT"
            fi
        - name: Build base image if missing
          if: steps.check-base.outputs.exists == 'false'
          working-directory: backend
          run: |
            docker build -f Dockerfile.base -t ${{ env.ARTIFACT_REGISTRY }}/prosody-api-base:latest .
            docker push ${{ env.ARTIFACT_REGISTRY }}/prosody-api-base:latest
        - name: Build and push app image
          working-directory: backend
          run: |
            docker build -f Dockerfile.fast -t ${{ env.ARTIFACT_REGISTRY }}/prosody-api:${{ github.sha }} .
            docker push ${{ env.ARTIFACT_REGISTRY }}/prosody-api:${{ github.sha }}
        - uses: google-github-actions/deploy-cloudrun@v2
          with:
            service: prosody-api
            region: us-central1
            image: ${{ env.ARTIFACT_REGISTRY }}/prosody-api:${{ github.sha }}
            flags: --allow-unauthenticated

I learned that always() is needed there. Without it, GitHub skips the job outright whenever an upstream job was skipped, without evaluating the rest of the condition. I also added a docker manifest inspect check to handle the case where the base image doesn't exist yet, like on a first deploy or if someone accidentally deleted it.
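One possible variation, shown only as a sketch and not what the workflow above uses, is to swap always() for !cancelled(). Both are status check functions, so the condition is still evaluated when build-base-image was skipped, but with !cancelled() the job does not start if the run itself was cancelled. The fragment below shows only the changed condition; the steps are assumed to be the same as before.

```yaml
deploy-backend:
  runs-on: ubuntu-latest
  needs: [detect-changes, build-base-image]
  # Wrapped in ${{ }} because a bare value starting with "!" is read as a YAML tag.
  if: ${{ !cancelled() && needs.detect-changes.outputs.backend == 'true' && (needs.build-base-image.result == 'success' || needs.build-base-image.result == 'skipped') }}
  # steps: unchanged from the job above
```

Either form works for the skipped-upstream case. The difference only shows up when a run is cancelled partway through.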

The frontend part

The frontend job ended up being simpler since it doesn't depend on the backend at all:

    deploy-frontend:
      runs-on: ubuntu-latest
      needs: detect-changes
      if: needs.detect-changes.outputs.frontend == 'true'
      steps:
        - uses: actions/checkout@v4
        - uses: actions/setup-node@v4
          with:
            node-version: '20'
            cache: 'npm'
            cache-dependency-path: frontend/package-lock.json
        - run: npm ci
          working-directory: frontend
        - run: npm run build
          working-directory: frontend
        - uses: FirebaseExtended/action-hosting-deploy@v0
          with:
            repoToken: ${{ secrets.GITHUB_TOKEN }}
            firebaseServiceAccount: ${{ secrets.FIREBASE_SERVICE_ACCOUNT_LESCOACH }}
            channelId: live
            projectId: lescoach
            entryPoint: frontend

If only the frontend changed, this is the only deploy job that runs, right after detect-changes.

Auth setup

I went with Workload Identity Federation instead of service account keys. It was more setup upfront, but I liked not having to deal with rotating keys or worrying about JSON files sitting in secrets. The workflow gets short-lived credentials through OIDC, which felt cleaner to me.
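For the OIDC exchange to work, the workflow has to be allowed to request an ID token. A minimal sketch of that permissions block, assuming it sits at the top level of the workflow (it can also be set per job):

```yaml
permissions:
  contents: read    # needed by actions/checkout
  id-token: write   # lets google-github-actions/auth request the OIDC token
```

Without id-token: write, the auth step has no token to exchange with Google Cloud and fails before any deploy step runs.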

Where I ended up

Frontend-only changes now deploy in under a minute. Backend code changes take about 90 seconds. Dependency changes still take around 4 minutes, but those are rare for me.

Most days I'm tweaking UI stuff and it just deploys quickly now. The whole workflow ended up being about 130 lines of YAML. The paths-filter action does most of the heavy lifting for figuring out what changed.